London to NYC: Building with Llama 4 at AI Hackathon

From immigration queue observation to working AI application in 36 hours

Last week, I flew from London to NYC, built an AI application in 36 hours, won the "Best Use of Llama API" award, and returned to work. The project, Parent Bridge, emerged from a simple observation: language barriers don't just exist at airports—they follow families home when kids need homework help.

Strategic Insight: Idea-First Team Formation

Most hackathons follow a broken pattern: form teams first, then scramble for ideas. Flip the order. Pitch your concept first, then let a team organize around it. When I presented Parent Bridge during networking, I could immediately tell genuine excitement from politeness. The two teammates who joined weren't just available; they were energized by the problem.

This approach delivers:

- Intrinsically motivated team members

- Natural skill alignment

- Shared purpose over convenience

- No time lost on the "what should we build?" phase

Keep teams to 3-4 people maximum. Beyond that, coordination overhead kills velocity.
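One rough way to see why is the standard pairwise-channels estimate. This is a back-of-the-envelope model, not something we measured. With $n$ people, the number of one-to-one communication channels is:

$$
C(n) = \frac{n(n-1)}{2}, \qquad C(3) = 3,\quad C(4) = 6,\quad C(6) = 15.
$$

Going from four people to six raises the count from 6 channels to 15. That is more than double the coordination for 50% more hands.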

Technical Architecture: Understanding Llama 4's Capabilities

The hackathon provided access to Llama 4 models, specifically:

- Llama-4-Scout-17B-16E-Instruct-FP8 (multimodal)

- Llama-4-Maverick-17B-128E-Instruct-FP8 (multimodal)

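Critical architectural difference: Llama 4 uses a Mixture of Experts (MoE) architecture with early fusion, unlike Llama 3's dense architecture with late fusion. Early fusion means text and image tokens are processed together from the first layer. As a result, the model can read the words and mathematical symbols in a homework photo within a single shared context. The model names encode these details: 17B is the number of active parameters per token, 16E and 128E are the expert counts, and FP8 denotes 8-bit floating-point weights.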
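To make "Mixture of Experts" concrete, here is a minimal sketch of generic top-k gating in plain Python. A small router scores every expert for a token, and only the highest-scoring experts actually run. This is a toy under stated assumptions, not Llama 4's real layer: the experts are simple scaling functions, the router weights are made up, and details of the production architecture are deliberately left out.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the router's logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Vector, router_weights: List[Vector], experts: List[Expert],
                top_k: int = 1) -> Tuple[Vector, List[int]]:
    """Send one token to its top-k experts and mix their outputs by renormalized gate weight."""
    # Router: one logit per expert, the dot product of the token with that expert's router row.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(logits)
    # Only the top-k experts run; skipping the others is where MoE saves compute.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(token)
    for i in chosen:
        gate = probs[i] / norm
        out = [o + gate * y for o, y in zip(out, experts[i](token))]
    return out, chosen


if __name__ == "__main__":
    # Four toy "experts" (hypothetical) that just scale their input by 1, 2, 3 and 4.
    experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
    router = [[0.0, 1.0], [0.5, 0.0], [2.0, 0.0], [-1.0, 0.0]]
    print(moe_forward([1.0, 0.0], router, experts, top_k=1))  # ([3.0, 0.0], [2])
```

With 128 experts and a small `top_k`, most expert weights sit idle for any given token. This is how a model with a large total parameter count can keep the per-token active count at 17B.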

The 128k token context window allowed processing entire homework assignments, not fragments—crucial for understanding student learning patterns across multiple problems.
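A quick budget check shows why whole assignments fit comfortably. The sketch below assumes a hypothetical per-page token cost. The real figure depends on the tokenizer and image resolution and should be taken from the usage numbers the API reports. The 128k window is the one stated above; the function names are illustrative.

```python
def context_headroom(pages: int, tokens_per_page: int, prompt_tokens: int,
                     max_output_tokens: int, context_window: int = 128_000) -> int:
    """Tokens left over after the whole assignment, the prompt and the reply are counted.

    A negative result means the assignment would have to be split across requests.
    """
    needed = pages * tokens_per_page + prompt_tokens + max_output_tokens
    return context_window - needed


def max_pages(tokens_per_page: int, prompt_tokens: int, max_output_tokens: int,
              context_window: int = 128_000) -> int:
    """Largest number of pages that fit in a single request."""
    return max(0, (context_window - prompt_tokens - max_output_tokens) // tokens_per_page)
```

Assume a hypothetical 2,000 tokens per photographed page, 1,000 prompt tokens, and 4,000 tokens reserved for the reply. Under those numbers, `max_pages` returns 61, far more than a typical homework assignment.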

Computer Vision Challenge: The Paper Problem

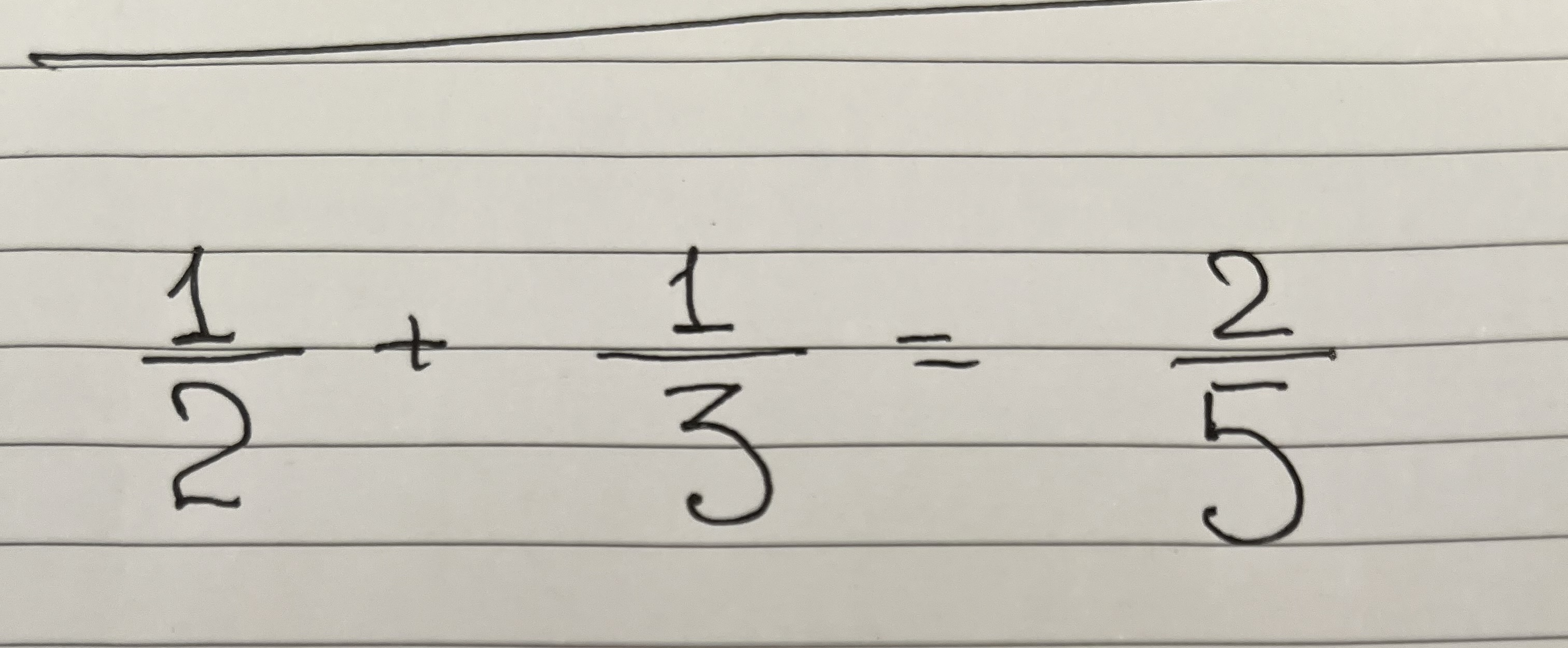

Our initial approach nearly failed. Testing with lined paper homework created visual noise that interfered with mathematical expression recognition. "1/2 + 1/3 = 2/5" became garbled, and fractions were consistently misinterpreted.

Note: Mathematical errors in homework are intentional - the project analyzes student mistakes
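The planted error in "1/2 + 1/3 = 2/5" is the classic mistake of adding numerators and denominators separately; the correct sum is 5/6. In Parent Bridge this judgement came from the model. The deterministic check below is a hypothetical sketch of how one such misconception could be confirmed once the numbers have been read from the photo. It uses Python's standard `fractions` module, which reduces fractions automatically, so the "added across" form is computed from the reduced inputs.

```python
from fractions import Fraction


def classify_fraction_sum(a: Fraction, b: Fraction, student_answer: Fraction) -> str:
    """Label a student's answer to a + b as correct, the 'added across' error, or other."""
    if student_answer == a + b:
        return "correct"
    # Common misconception: add numerators and denominators separately, e.g. 1/2 + 1/3 -> 2/5.
    added_across = Fraction(a.numerator + b.numerator, a.denominator + b.denominator)
    if student_answer == added_across:
        return "added numerators and denominators"
    return "other error"


if __name__ == "__main__":
    print(classify_fraction_sum(Fraction(1, 2), Fraction(1, 3), Fraction(2, 5)))
    # prints: added numerators and denominators
```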

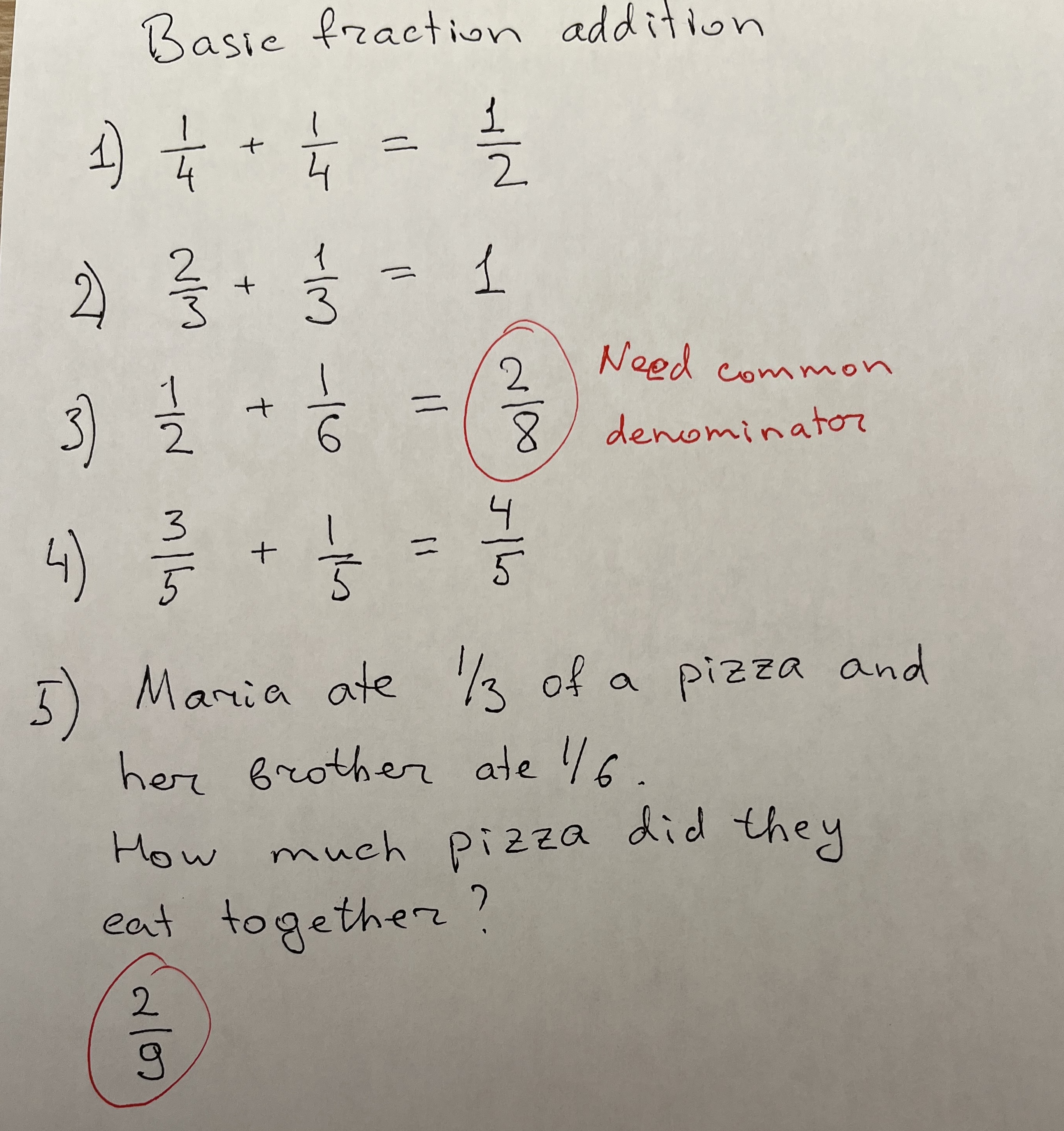

After consulting Meta representatives, we discovered image preprocessing was everything. Switching to plain white paper transformed our results:

Note: Mathematical errors in homework are intentional - the project analyzes student mistakes

Technical lesson: Multimodal models are sensitive to input quality. Image rotation, lighting conditions, and paper choice matter significantly for consistent results. The difference between working and broken demos often comes down to preprocessing details.
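We fixed the problem by changing the paper. When lined paper can't be avoided, a software preprocessing step can do some of the same work. The sketch below is a hypothetical alternative we did not ship. It works on a grayscale image held as a 2D list (0 = black, 255 = white) and uses a horizontal projection profile. Printed ruled lines are dark across almost the full width of the page, while handwriting, including short fraction bars, is not. Real code would load and rotate the photo with an imaging library such as Pillow or OpenCV first; the threshold values here are assumptions to tune.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixels: 0 = black, 255 = white


def binarize(grid: Grid, threshold: int = 128) -> Grid:
    """Map every pixel to pure black (0) or pure white (255)."""
    return [[0 if px < threshold else 255 for px in row] for row in grid]


def remove_ruled_lines(grid: Grid, min_dark_fraction: float = 0.8) -> Grid:
    """Whiten rows of a binarized grid that are dark across most of the width.

    Handwriting rarely spans most of a row, so it survives; the trade-off is that
    any ink lying exactly on a ruled line is erased along with it.
    """
    cleaned = []
    for row in grid:
        dark = sum(1 for px in row if px == 0)
        if row and dark / len(row) >= min_dark_fraction:
            cleaned.append([255] * len(row))
        else:
            cleaned.append(list(row))
    return cleaned


if __name__ == "__main__":
    W = 255
    page = [
        [W] * 10,
        [W, W, W, 40, 40, 40, W, W, W, W],  # short fraction bar written by the student
        [90] * 10,                           # printed ruled line (medium gray)
    ]
    for row in remove_ruled_lines(binarize(page)):
        print(row)
```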

Core Technical Innovation

Parent Bridge leverages Llama 4's multilingual capabilities beyond simple translation. Instead of converting text, we generate contextual explanations that help non-English-speaking parents understand not just what their children are learning, but why it matters within their cultural and educational context.

The system works in three stages:

- Vision processing - scans handwritten homework with high accuracy

- Intelligent analysis - identifies learning gaps and conceptual misunderstandings

- Contextual multilingual output - generates culturally-aware progress reports
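The three stages can be driven by a single multimodal request. The sketch below only assembles the payload and makes no network call. It assumes an OpenAI-style chat schema with an `image_url` content part carrying a base64 data URL; check the Llama API reference for the exact endpoint and field names. The function name and prompt wording are hypothetical illustrations, not our exact hackathon prompt.

```python
import base64


def build_homework_request(image_bytes: bytes, parent_language: str,
                           model: str = "Llama-4-Maverick-17B-128E-Instruct-FP8",
                           mime_type: str = "image/jpeg") -> dict:
    """Assemble one chat request covering all three stages: read, analyze, explain."""
    # Assumed schema: the image travels inline as a base64 data URL.
    data_url = f"data:{mime_type};base64," + base64.b64encode(image_bytes).decode("ascii")
    system_prompt = (
        "You are helping a parent who is not fluent in English understand their child's homework. "
        "1) Transcribe each problem and the child's answer. "
        "2) Identify mistakes and the misconception behind each one. "
        f"3) Write a short, encouraging report in {parent_language} that explains what the "
        "child is learning and why it matters, without assuming knowledge of the local school system."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Here is tonight's homework."},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            },
        ],
    }
```

Keeping transcription, analysis, and the parent-facing report in one request lets the model explain each mistake with the image still in context. This is the early-fusion advantage described earlier.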

Execution Under Pressure

We had dozens of feature ideas: real-time tutoring, progress tracking, school system integration, gamification. We said no to all of them. In 36 hours, your only job is proving your core hypothesis works.

Our ruthless focus: Can AI bridge communication gaps between teachers and non-English-speaking parents by analyzing actual homework? Everything else was noise.